Hybrid AI: Where Precision Meets Possibility

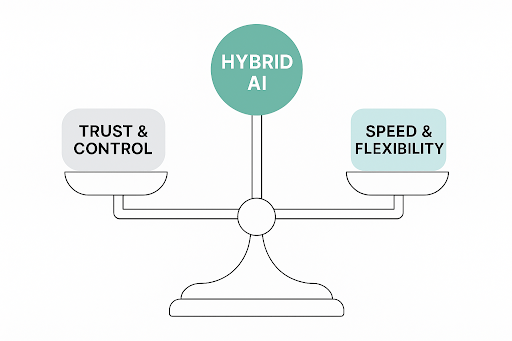

Here is the crux of the debate: deterministic AI models provide control, while probabilistic AI models offer creative power. Do you choose reliability or agility? Can a single enterprise AI strategy support both risk-averse compliance teams and fast-moving marketing and sales teams?

Increasingly, the answer is yes. The resolution to this duality is called Hybrid AI.

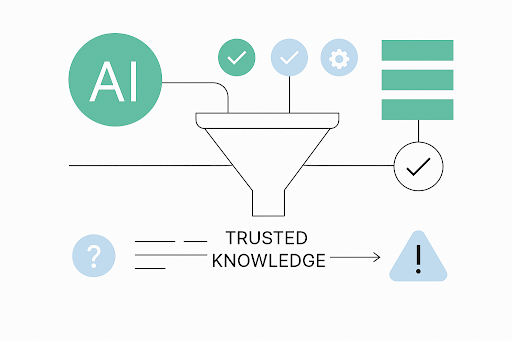

Hybrid AI is a Composite AI technology that combines both types of AI model. It starts with a deterministic engine, such as a Knowledge Graph AI. If a verified answer exists, it’s delivered instantly. No debate. No further questions. No energy wasted reinventing the wheel.

If no verified answer exists, and no sufficiently similar one is available, the system invokes a probabilistic model. Here, an LLM constrained by a Retrieval-Augmented Generation (RAG) method answers the question or query. The key is governance: the generative AI model is limited to a curated, internal Trusted Collection of documents (the RAG solution).

When the generative side produces a response, the user is informed of its potential for errors and the associated risk. If, on the other hand, the answer comes from the deterministic side, it was pre-sanctioned by the organization and vetted by a human expert, so it is fully reliable.
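The deterministic-first flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not kama.ai’s implementation: a dictionary of vetted question-answer pairs stands in for the Knowledge Graph, `difflib` string similarity stands in for the “similar answer” check, naive keyword overlap stands in for RAG retrieval over the Trusted Collection, and `llm` is a hypothetical placeholder for whatever model the system actually calls. `HybridRouter`, `threshold`, and the sample data are invented for illustration.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Callable, Optional

# Hypothetical sketch: class names, the 0.85 threshold and the sample data are assumptions.


@dataclass
class Answer:
    text: str
    source: str                       # "deterministic" or "generative"
    vetted: bool                      # True only for expert-sanctioned answers
    disclaimer: Optional[str] = None  # risk notice attached to generated answers


def normalize(question: str) -> str:
    """Lowercase, trim a trailing '?', and collapse whitespace."""
    return " ".join(question.lower().strip().rstrip("?").split())


def tokens(text: str) -> set[str]:
    """Set of lowercase alphanumeric words, used for naive retrieval."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class HybridRouter:
    def __init__(self, vetted: dict[str, str], trusted_docs: list[str],
                 llm: Callable[[str], str], threshold: float = 0.85) -> None:
        self.vetted = {normalize(q): a for q, a in vetted.items()}
        self.trusted_docs = trusted_docs  # stands in for the curated Trusted Collection
        self.llm = llm                    # any text-in, text-out model call
        self.threshold = threshold        # minimum similarity to accept a near match

    def lookup(self, question: str) -> Optional[str]:
        """Deterministic step: exact match first, then the most similar vetted question."""
        q = normalize(question)
        if q in self.vetted:
            return self.vetted[q]
        best_answer, best_score = None, 0.0
        for known_q, answer in self.vetted.items():
            score = SequenceMatcher(None, q, known_q).ratio()
            if score > best_score:
                best_answer, best_score = answer, score
        return best_answer if best_score >= self.threshold else None

    def retrieve(self, question: str, k: int = 2) -> list[str]:
        """RAG step: rank trusted documents by word overlap and drop non-matches."""
        q_words = tokens(question)
        ranked = sorted(self.trusted_docs,
                        key=lambda doc: len(q_words & tokens(doc)), reverse=True)
        return [doc for doc in ranked[:k] if q_words & tokens(doc)]

    def answer(self, question: str) -> Answer:
        vetted = self.lookup(question)
        if vetted is not None:
            return Answer(vetted, source="deterministic", vetted=True)
        # Fallback: the model only sees text retrieved from the trusted documents.
        context = "\n".join(self.retrieve(question))
        prompt = ("Answer using ONLY the context below. If it does not cover "
                  f"the question, say so.\n\nContext:\n{context}\n\nQuestion: {question}")
        return Answer(self.llm(prompt), source="generative", vetted=False,
                      disclaimer="Generated from internal documents; may contain errors.")


router = HybridRouter(
    vetted={"What is the refund window?": "Refunds are accepted within 30 days."},
    trusted_docs=["Shipping takes 5 business days.", "Gift cards never expire."],
    llm=lambda prompt: "(LLM draft grounded in the retrieved context)",  # stub model
)
print(router.answer("What's the refund window?").source)     # deterministic
print(router.answer("How long does shipping take?").source)  # generative
```

The near-match threshold is the main design lever in this sketch: set it too low and loosely related questions receive a vetted answer meant for something else; set it too high and more traffic falls through to the generative path, where the disclaimer applies.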

The result is safer, smarter AI – without sacrificing scale.

Why Enterprises Are Moving Toward Hybrid AI Models

According to Database Trends and Applications, 29% of companies report having RAG solutions in place or actively implementing them (DT&A, Jan 2025). This shift highlights a growing industry trend: AI models must be tailored, trusted, and traceable, especially in customer-facing or regulated environments. Given how common hallucinations are in LLM-based generative AI, this figure is alarmingly low.

There’s also a strong economic case. Even a small error rate can wreak havoc at scale. A 2% inaccuracy rate can result in over 18,000 flawed interactions per year in a mid-sized enterprise, according to kama.ai’s 2025 Hybrid AI report (kama.ai, May 2025). That’s thousands of potential complaints, refunds, or legal escalations – not to mention reputational damage. With Hybrid AI, those risks are mitigated. Every interaction is anchored in approved knowledge and monitored by subject-matter experts.
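Those two figures also imply a traffic volume. Assuming the 18,000 flawed interactions are simply the 2% error rate applied to all interactions (the report’s exact method isn’t given here), the implied annual volume works out as:

```latex
N_{\text{flawed}} = r \cdot N_{\text{total}}
\;\Rightarrow\;
N_{\text{total}} = \frac{N_{\text{flawed}}}{r} = \frac{18{,}000}{0.02} = 900{,}000 \ \text{interactions/year} \approx 2{,}466 \ \text{per day}
```

At that volume, every percentage point of error accounts for roughly 9,000 flawed interactions a year.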

Responsible AI Starts with the Right Model

The AI Model Debate Is Over. Hybrid Wins.

As the use of AI models explodes across industries, one key debate continues to dominate enterprise strategy: whether organizations should rely on deterministic or probabilistic AI models. These two types of AI technology offer distinctly different benefits and come with equally distinct risks. The good news? A clear resolution has emerged.

Strengths and Limits of Deterministic AI Models

Start with deterministic AI models. These models are rule-based and built on structured logic; Knowledge Graph AI systems fall into this category. The output is always consistent, governed, and fully explainable. That makes deterministic AI models ideal for compliance-heavy industries where accuracy, auditability, and brand safety are critical. Frankly, that describes every enterprise business. If a brand must ensure the same answer is given every time, and that answer must be 100% verified as correct, deterministic AI delivers. But it comes with limits. These models can be perceived as inflexible in open-ended scenarios, they are built NOT to “guess” (no hallucinations here), and they need upfront effort to build and maintain. In other words, humans must stay in the loop to sanction answers whenever high-risk scenarios or brand issues are on the table.
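To make “always consistent” and “built NOT to guess” concrete, here is a toy Python sketch of a deterministic lookup over expert-approved facts. It assumes a plain dictionary of (subject, predicate) edges in place of a real graph database; `KnowledgeGraph`, `approve`, `ask`, and the sample facts are hypothetical names chosen for illustration, not part of any product API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical sketch: a dict-backed stand-in for a Knowledge Graph of vetted facts.


@dataclass(frozen=True)
class Fact:
    subject: str
    predicate: str
    obj: str
    approved_by: str  # the human expert who sanctioned this fact


class KnowledgeGraph:
    """Toy store of expert-approved (subject, predicate) -> object edges."""

    def __init__(self) -> None:
        self._facts: dict[tuple[str, str], Fact] = {}

    def approve(self, subject: str, predicate: str, obj: str, approved_by: str) -> None:
        # Human in the loop: nothing enters the graph without a named approver.
        if not approved_by:
            raise ValueError("every fact must be sanctioned by a named expert")
        self._facts[(subject, predicate)] = Fact(subject, predicate, obj, approved_by)

    def ask(self, subject: str, predicate: str) -> Optional[Fact]:
        # Exact lookup only: an unknown question returns None instead of a guess.
        return self._facts.get((subject, predicate))


kg = KnowledgeGraph()
kg.approve("premium_plan", "refund_window", "30 days", approved_by="compliance_team")

fact = kg.ask("premium_plan", "refund_window")
print(fact.obj, "| approved by", fact.approved_by)  # 30 days | approved by compliance_team
print(kg.ask("premium_plan", "warranty_length"))     # None
```

The same query always returns the same approved fact, and a question outside the graph returns `None` rather than an invented answer. The `approved_by` field is where the upfront human effort shows up: every edge has to be sanctioned before it can be served.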

Can Probabilistic AI Models Be Trusted Blindly?

By contrast, probabilistic AI models, such as large language models (LLMs), are designed for adaptability. These models generate natural-sounding responses by identifying patterns in massive datasets. They are powerful tools for summarizing content, automating writing, or answering broad questions. But they often get it wrong. A recent McKinsey report found that 47% of organizations have already experienced negative consequences from using GenAI models, up from 44% in early 2024 (McKinsey, July 2024). These include hallucinated answers and intellectual property risks. Risks like these are often invisible until they’ve caused damage, which is the real danger.
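A probabilistic model chooses its output by sampling from a probability distribution, which is why the same prompt can produce different answers. The toy Python sketch below uses made-up scores for four candidate continuations to show standard softmax sampling with a temperature parameter. It illustrates the general mechanism only, not how any particular LLM is built, and `LOGITS` and `sample_next` are hypothetical names.

```python
from __future__ import annotations

import math
import random
from typing import Optional

# Hypothetical scores a model might assign to continuations of
# "Our refund window is ..."; the numbers are illustrative only.
LOGITS = {"30 days": 2.0, "60 days": 1.2, "flexible": 0.8, "unlimited": 0.3}


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the peak."""
    scaled = {w: s / temperature for w, s in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def sample_next(logits: dict[str, float], temperature: float = 1.0,
                rng: Optional[random.Random] = None) -> str:
    """Draw one continuation at random, weighted by its softmax probability."""
    if rng is None:
        rng = random.Random()
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


rng = random.Random(7)
print([sample_next(LOGITS, 1.0, rng) for _ in range(5)])  # same prompt, varying answers
print(softmax(LOGITS)["unlimited"])  # non-zero: a fluent but wrong answer can be sampled
```

Lowering `temperature` concentrates probability on the top-scoring option, but it never verifies that the option is correct, which is the gap the deterministic side of a hybrid system is meant to close.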

At kama.ai, we’ve built our platform around the Hybrid AI approach. Our Trustworthy Hybrid AI architecture ensures the right AI model is used for the right task: deterministic where precision is required, probabilistic where agility is valued. All of it is governed, auditable, and brand-safe by design.

If you’re looking to deploy AI models that scale trust and intelligence together, now is the time to act. Hybrid AI gives enterprises the control they need with the power they want. It’s not just the future of AI – it’s the standard for responsible AI deployment.

Download the full Hybrid AI Agent Guide to explore how your business can unlock the best of both AI models:

https://kama.ai/download-ebook-trustworthy-hybrid-ai/