Why Every Corporate AI Needs a Human in the Loop

Saying that AI is reshaping business fast is an understatement. It powers virtual agents, content creation, search tools, and decision engines. But it is not infallible. It makes mistakes, sometimes very big ones. And as organizations race to adopt automation, one theme keeps coming up: AI without oversight can damage trust, cost money, and break compliance.

That’s why every corporate AI system today needs one thing above all else: a human in the loop.

What “Human in the Loop” Really Means

“Human in the loop” means keeping people involved at the points in the AI process where judgment matters. It doesn’t just apply to training datasets. It means giving experts the ability to oversee, validate, and correct AI in real time.

It doesn’t mean that humans need to check every single output. That would negate the benefits of this revolutionary technology. Rather, humans need to evaluate and oversee the processes. They need to approve the direction and the high-level decisions, so that nothing happens without conscious consideration and there is a human conscience in the decision-making process. After that, the system can automate and churn through the high volumes that drive the productivity gains we need.

In this model, AI proposes answers, but a human has the final say and the right to review and adjust them. This is essential in brand-sensitive or regulated contexts like customer service, compliance, or healthcare. It ensures automation works with people, not around them.
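For teams that want to see what "AI proposes, a human decides" can look like in practice, here is a minimal sketch. It is an illustration under our own assumptions, not kama.ai's implementation: the `Proposal` class, the `review` function, and the sample question and answers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Proposal:
    """An AI-drafted answer waiting for a human decision (hypothetical type)."""
    question: str
    ai_answer: str
    status: str = "pending"              # "pending", "approved", or "rejected"
    final_answer: Optional[str] = None   # stays None until a reviewer approves


def review(proposal: Proposal, approve: bool,
           correction: Optional[str] = None) -> Proposal:
    """Record the reviewer's decision; nothing is final until this runs."""
    if not approve:
        proposal.status = "rejected"
        proposal.final_answer = None
        return proposal
    proposal.status = "approved"
    # The reviewer may approve the draft as-is or approve an adjusted wording.
    proposal.final_answer = (
        correction if correction is not None else proposal.ai_answer
    )
    return proposal


# Made-up example: the reviewer adjusts an overconfident draft.
draft = Proposal("Can I get a refund?", "Yes, always.")
reviewed = review(draft, approve=True,
                  correction="Refunds depend on the purchase terms.")
```

Once an answer is approved, it can be reused at volume without a fresh review each time, which is where the productivity gain comes from.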

Where Human Oversight Matters Most

Adding human oversight delivers real value in key business functions:

- Customer Support: A chatbot that hallucinates or misreads a complaint can erode trust. A human in the loop can handle unclear or sensitive requests like these.

- Marketing and Content: GenAI can generate text, yet it can’t always capture tone, values, or brand nuance. Human review avoids tone-deaf messaging, odd wording, and factual errors.

- Knowledge Management: When users ask for key information, answers must be correct. Human oversight ensures the right organizational documents are referenced and usable by the AI, so that responses are accurate and brand-safe.

The risks are real. According to Harvard Business Review (2023), 42% of companies using AI lack formal governance. No guardrails. No oversight. No visibility into outcomes.

That is not only a scary scenario. It is a problem waiting to happen.

Hybrid AI Agents: Designed for Oversight

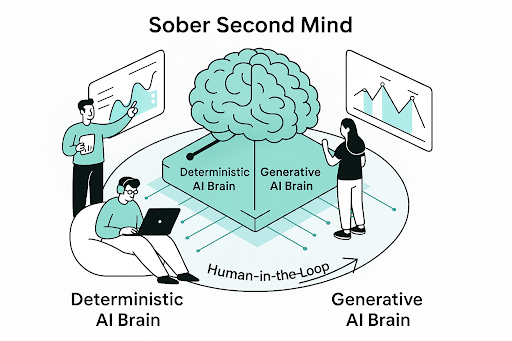

The Hybrid AI Agent model addresses this challenge. It combines deterministic Knowledge Graph AI with Generative AI in a layered structure. The system always searches a vetted knowledge base first. If no answer is found, it turns to Generative AI – within a Trusted Collection of approved content.

When an answer comes from Generative AI rather than the vetted knowledge base, the system flags it as “generated” to alert users. The answer is still based on the safe content provided to it, but it remains subject to error.

This is the essence of kama.ai’s GenAI Sober Second Mind® model, a control layer that determines whether to use deterministic or generative logic. It keeps sensitive interactions within trusted guardrails and allows businesses to set clear boundaries.
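The layered flow described in this section can be sketched in a few lines of Python. This is a simplified illustration, not kama.ai's actual code: the exact-match dictionary stands in for a real knowledge graph, `fake_generate` stands in for a real generative model, and every name and sample string below is a hypothetical of our own.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Answer:
    text: str
    source: str       # "knowledge_graph" or "generative"
    generated: bool   # True means the user should see a "generated" flag


def answer_query(
    query: str,
    vetted_answers: dict[str, str],
    trusted_collection: list[str],
    generate: Callable[[str, list[str]], Optional[str]],
) -> Optional[Answer]:
    """Try the vetted knowledge base first, then fall back to generation."""
    key = query.strip().lower()
    # Layer 1: deterministic lookup of human-approved answers.
    if key in vetted_answers:
        return Answer(vetted_answers[key], "knowledge_graph", generated=False)
    # Layer 2: generation restricted to the approved documents.
    draft = generate(query, trusted_collection)
    if draft is not None:
        return Answer(draft, "generative", generated=True)
    # Nothing usable: return None so the caller can hand off to a person.
    return None


def fake_generate(query: str, documents: list[str]) -> Optional[str]:
    """Hypothetical stand-in for a model call: simple keyword matching."""
    words = [w for w in query.lower().split() if len(w) > 3]
    hits = [d for d in documents if any(w in d.lower() for w in words)]
    return f"Based on approved content: {hits[0]}" if hits else None


# Made-up sample data for illustration only.
vetted = {"what are your support hours?": "Support is open on weekdays."}
trusted = ["Refunds are processed after the return is received."]

first = answer_query("What are your support hours?", vetted, trusted, fake_generate)
second = answer_query("How long do refunds take?", vetted, trusted, fake_generate)
```

Here `first` comes straight from the vetted answers and carries no flag, while `second` is drafted from the trusted content and is marked as generated. A question that matches neither layer returns `None`, which is a natural point to route the request to a person.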

Three Levels of Control

Hybrid AI Agents support three operational modes, tailored to risk levels:

- Knowledge Graph Mode: Pulls from pre-approved answers. Ideal for compliance and customer service. These are fully human-vetted answers that are sanctioned and brand-safe.

- Trusted GenAI Mode: Uses generative tools with vetted data (Trusted Content). It can be useful for onboarding, help desks, or internal queries.

- Open GenAI Mode: Leverages broad generative capabilities. Best for creative work or internal brainstorming. Definitely not suggested for external, customer-facing applications, as it is subject to all the challenges of current large language model (LLM) technologies.

The first two of these modes support the human-in-the-loop model. As such, they are the two modes recommended for organizational use.
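One way to make the three modes concrete is to treat mode selection as an explicit, reviewable policy. The sketch below is an assumption-laden illustration rather than a kama.ai API: the `Mode` enum, the use-case names, and the choice to raise an error for customer-facing Open GenAI use are all our own.

```python
from enum import Enum


class Mode(Enum):
    KNOWLEDGE_GRAPH = "knowledge_graph"   # pre-approved answers only
    TRUSTED_GENAI = "trusted_genai"       # generation over vetted content
    OPEN_GENAI = "open_genai"             # broad generative capabilities


# Hypothetical mapping from use case to mode, following the list above.
MODE_BY_USE_CASE = {
    "compliance": Mode.KNOWLEDGE_GRAPH,
    "customer_service": Mode.KNOWLEDGE_GRAPH,
    "onboarding": Mode.TRUSTED_GENAI,
    "help_desk": Mode.TRUSTED_GENAI,
    "internal_query": Mode.TRUSTED_GENAI,
    "brainstorming": Mode.OPEN_GENAI,
}


def select_mode(use_case: str, customer_facing: bool) -> Mode:
    """Pick a mode, defaulting to the most restrictive one."""
    # Unknown use cases fall back to fully vetted answers.
    mode = MODE_BY_USE_CASE.get(use_case, Mode.KNOWLEDGE_GRAPH)
    if customer_facing and mode is Mode.OPEN_GENAI:
        raise ValueError(
            "Open GenAI Mode is not suggested for customer-facing use"
        )
    return mode


internal = select_mode("brainstorming", customer_facing=False)
fallback = select_mode("unlisted_use_case", customer_facing=True)
```

Defaulting unknown use cases to Knowledge Graph Mode is a deliberate design choice in this sketch: when nobody has decided how risky a use case is, the safest mode applies until a person says otherwise.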

Why Business Leaders Must Engage

For CEOs, AI governance protects the brand. For CMOs, it keeps messaging on track. For Customer Success leaders, it delivers speed without sacrificing accuracy.

And the trend is clear. Gartner (2024) predicts that by 2026, more than 75% of businesses using AI will need a formal AI governance framework. Hybrid AI Agents meet this demand by building oversight into their design.

A human in the loop isn’t a bottleneck. It’s a safeguard. It doesn’t slow down AI. It makes scaling AI solutions safe.

As kama.ai CEO Brian Ritchie says: “The world does not need faster AI. It needs AI that is smarter, safer, and trustworthy.”

Trust People. Empower AI.

Automation is powerful. It will dramatically improve corporate productivity. But it’s only as safe as the human controls around it. Human-in-the-loop strategies ensure AI stays accurate, ethical, and brand-safe.

If you’re rolling out AI tools, ask: Where’s the human? Because once a machine speaks for your business, you own what it says. Talk with a kama.ai expert about what to consider for your next AI project.

Want more insight?

Read our free ebook Hybrid AI Agents. Learn how to build responsible AI with trust, accuracy, and control. No forms. No spam. Just smart thinking.