What Are Hybrid AI Agents?

Hybrid AI Agents combine two powerful AI solutions: deterministic Knowledge Graph AIs and Generative AI technologies. This hybrid structure gives businesses a flexible, accurate, and safe AI toolset. These agents are designed to offer fast help using a Trusted Collection of referenceable material to provide accurate, verified answers when it’s critical.

Hybrid AI Agents work in a layered way. First, they search for answers in a vetted and fully approved knowledge base. If nothing is found, they move to Trusted Collections powered by GenAI. This means that even when an exact answer isn’t available, the system can generate one, while making it clear that it may not be a brand-sanctioned response.

Using a layered structure gives organizations more control over the answers the system provides, for both external and internal applications.
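The layered lookup described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not kama.ai's implementation: the `Answer` type, the `answer_query` function, the dictionary standing in for the approved knowledge base, and the `generate_from_trusted` callback standing in for generation over a Trusted Collection are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Answer:
    text: str
    source: str       # "knowledge_graph" or "trusted_genai" (hypothetical labels)
    sanctioned: bool  # True only for pre-approved answers


def answer_query(
    query: str,
    approved_answers: dict[str, str],
    generate_from_trusted: Callable[[str], Optional[str]],
) -> Optional[Answer]:
    """Check the vetted knowledge base first, then fall back to generation."""
    # Layer 1: exact match against pre-approved content (a plain dict here,
    # standing in for a real knowledge graph lookup).
    key = query.strip().lower()
    if key in approved_answers:
        return Answer(approved_answers[key], "knowledge_graph", True)

    # Layer 2: generated answer grounded in the Trusted Collection.
    # The callback is an assumption; it returns None when nothing relevant exists.
    generated = generate_from_trusted(query)
    if generated is None:
        return None

    # Generated answers are flagged so the user can be told they are not
    # brand-sanctioned.
    return Answer(generated, "trusted_genai", False)
```

The `sanctioned` flag is what lets the calling application label a generated reply as not brand-sanctioned before showing it.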

GenAI’s Sober Second Mind®

GenAI is powerful but unpredictable. It can deliver quick answers, but not always accurate ones. That’s why kama.ai introduced GenAI’s Sober Second Mind®. It’s a control layer that determines when to use deterministic reasoning and when to leverage generative capabilities.

Deterministic answers are fully sanctioned and vetted in advance by knowledge managers (KMs) or subject matter experts (SMEs). Even when using GenAI, kama.ai ensures responses stay within guardrails. The Trusted Collections act as those guardrails within a Retrieval Augmented Generation (RAG) framework.

With this setup, teams reduce risk but keep their flexibility. The Trusted Collection includes only documents and data selected by the KM. At kama.ai, managing this collection is simple – thanks to a user-friendly, drag-and-drop interface.

As a result, businesses stay in control. For fast, flexible answers, use GenAI with a Trusted Collection. For precise, verified responses, use deterministic Knowledge Graph AI. Organizations can set their boundaries based on context, sensitivity, or audience.

Why Hybrid AI Agents Matter for Enterprises

AI technologies today face serious issues – hallucinations, biases, and poisoned data in large language models (LLMs). Meanwhile, enterprises are under pressure to deploy AI responsibly. Customers expect speed – but also demand accuracy. In regulated industries, one mistake can be costly.

Oxford University (2024) reports hallucinations in 58% of LLM outputs. That poses real brand risk. Even though the LLMs are improving, these challenges persist. Hybrid AI Agents are built to avoid these challenges. Their automation includes built-in guardrails and human oversight, resulting in fewer errors and more trustworthy interactions.

According to kama.ai’s most recent ebook, even a 2% AI error rate at enterprise scale could cost $1.2M annually. These costs stem from legal issues, customer complaints, and workflow rework. That’s why upfront accuracy matters.

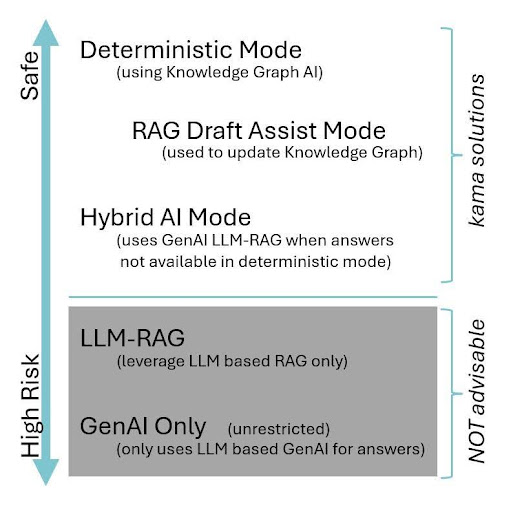

A Risk-Control Framework

Hybrid AI Agents support different levels of control based on the user’s context:

- Knowledge Graph Mode: Pulls answers only from pre-approved content. Best when risk must be kept low.

- Trusted GenAI Mode: Generates responses using vetted sources in the Trusted Collection. Use when speed is important but accuracy remains critical.

- Open GenAI Mode: Leverages broader GenAI tools without direct oversight. Higher risk but valuable in creative, low-risk environments.

Enterprises can match the mode to the interaction. Customer-facing roles should stick to deterministic Knowledge Graph Mode. Internal use or brainstorming may allow for Trusted GenAI Mode. This adaptability makes Hybrid AI Agents highly valuable.
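One way to picture matching the mode to the interaction is a small policy function. The sketch below is hypothetical: the `Mode` enum, the `select_mode` function, and the `allow_open_genai` opt-in flag are illustrative names, and the rules simply encode the guidance above (customer-facing traffic stays deterministic, internal work defaults to Trusted GenAI, and Open GenAI is used only when explicitly allowed).

```python
from enum import Enum


class Mode(Enum):
    KNOWLEDGE_GRAPH = "knowledge_graph"
    TRUSTED_GENAI = "trusted_genai"
    OPEN_GENAI = "open_genai"


def select_mode(customer_facing: bool, allow_open_genai: bool = False) -> Mode:
    """Pick a response mode from the interaction context (illustrative policy)."""
    # Customer-facing interactions stick to pre-approved answers.
    if customer_facing:
        return Mode.KNOWLEDGE_GRAPH
    # Open GenAI is an explicit opt-in for creative, low-risk internal work.
    if allow_open_genai:
        return Mode.OPEN_GENAI
    # Internal use and brainstorming default to the Trusted Collection.
    return Mode.TRUSTED_GENAI
```

Checking `customer_facing` first means the opt-in flag can never loosen the rules for external audiences, which keeps the strictest setting as the default for the highest-exposure channel.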

Built for Business Safety and Control

Hybrid AI Agents go beyond typical chat tools. They are designed with feedback loops, oversight, and governance. This makes every answer trackable, auditable, and safe.

A human-in-the-loop approach allows experts to guide content development, monitor outputs, and manage updates. Teams can avoid nonsense or off-brand responses.

Deloitte’s 2024 report shows that AI with human oversight cuts bias incidents by 47%. Studies consistently show that hybrid systems deliver the best outcomes. This is not only smart – it builds trust. Zendesk’s 2025 report found 63% of customers are concerned about AI bias. Hybrid AI Agents help address that concern.

Why Now?

The rise of AI tools, bots, and platforms is speeding up. But with this speed comes risk. Hybrid AI Agents help enterprises scale safely. They provide agility without losing trust – and speed without ignoring ethics.

As kama.ai’s CEO Brian Ritchie puts it, “The world does not need faster AI. It needs AI that is smarter, safer, and trustworthy.”

Hybrid AI Agents are how we get there.

If your organization is exploring AI options, consider Responsible AI technologies like Hybrid AI Agents. For a no-pressure review of your needs and options, get in touch.

Want to Learn More?

Download the free ebook Hybrid AI Agents to explore the full model, complete with charts, use cases, and examples. No forms. No strings attached.