Reducing AI Hallucinations: Why Human Oversight is Key

AI technology is transforming how businesses engage with customers. Customers now expect fast, high-quality service from the AI bots they interact with, instead of waiting in a queue for a human agent to respond. However, this shift also raises the stakes for your brand. AI hallucinations, answers that are confident but incorrect, are a growing concern because they can erode trust in your brand.

Large Language Models (LLMs) like ChatGPT generate responses that sound believable but often lack factual accuracy. For businesses, this can spread misinformation, erode trust, and flat-out damage your brand’s reputation.

How can businesses prevent these risks? The answer lies in using the right AI technology for the right purposes. For customer-facing tasks such as a website chatbot, the right combination is Knowledge Graph AI technology with human oversight. It ensures AI delivers accurate, reliable, and on-brand information. This is what we consider the modern AI Agent: one that uses Responsible AI technologies and processes to get it right.

What Are AI Hallucinations?

AI hallucinations occur when generative AI models produce answers that are plausible but untrue. This happens because LLMs rely on patterns in language, not verified facts. While they excel at creativity and natural conversation, this approach cannot guarantee accuracy.

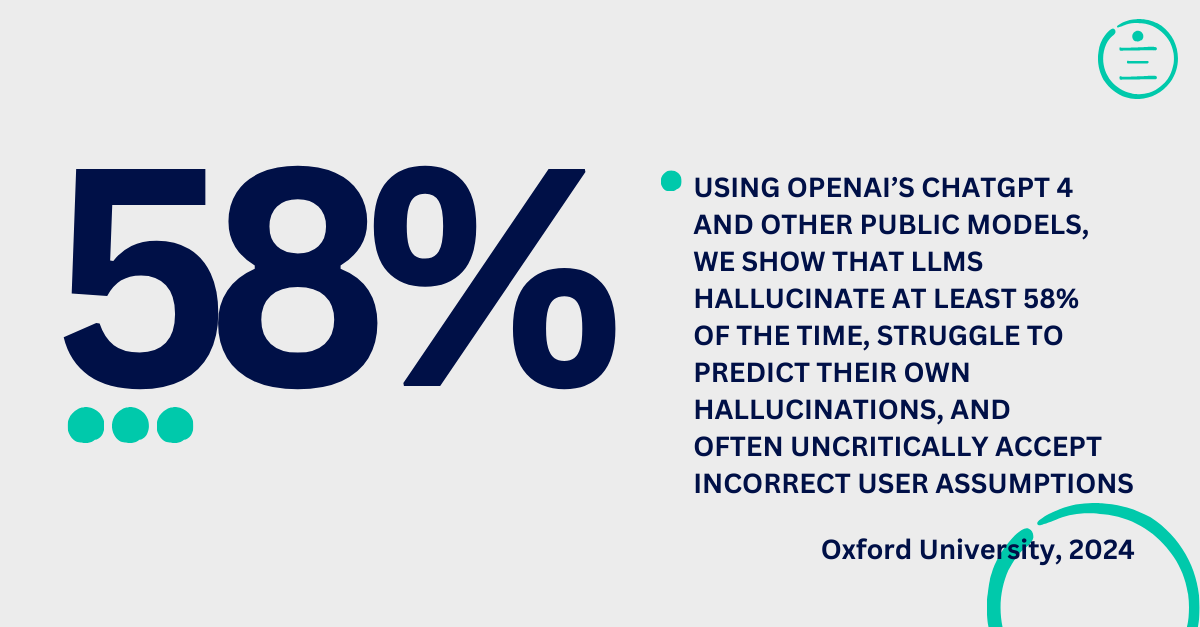

A 2024 study by Oxford University found that “LLMs hallucinate 58% of the time.” For businesses, these errors are not trivial. A single incorrect response can mislead customers, reduce confidence, and harm your brand’s credibility. Push this further. Think of a large enterprise such as a big bank, which may handle 10,000 chatbot inquiries every day. Connecting that chatbot directly to an LLM could mean 5,800 incorrect answers per day. After a month, what are the chances that some of the more comically incorrect answers will show up in social media posts from angry or bewildered clients? What would that do to the bank’s reputation and image?
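
The arithmetic behind that estimate is easy to check. The short sketch below multiplies daily inquiries by the quoted hallucination rate. It assumes, as the example above does, that the 58% figure applies to every answer, and it assumes a 30-day month; the function name `expected_incorrect_answers` is hypothetical.

```python
# Back-of-the-envelope estimate using the figures quoted above:
# a 58% hallucination rate and 10,000 chatbot inquiries per day.
# Assumption: the rate applies to every answer, and a month is 30 days.

def expected_incorrect_answers(daily_inquiries: int, hallucination_rate: float, days: int = 1) -> int:
    """Return the expected number of incorrect answers over `days` days."""
    return round(daily_inquiries * hallucination_rate * days)


per_day = expected_incorrect_answers(10_000, 0.58)
per_month = expected_incorrect_answers(10_000, 0.58, days=30)
print(f"Per day: {per_day:,}")      # Per day: 5,800
print(f"Per month: {per_month:,}")  # Per month: 174,000
```

Even if the real rate for a given deployment were far lower than the quoted figure, multiplying any non-trivial error rate by enterprise-scale volume still yields a large number of wrong answers.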

For customer-facing AI, accuracy isn’t optional. Your virtual agents need to deliver answers that are both fast and factually correct. This is where Knowledge Graph AI technology outshines LLMs.

Why Knowledge Graph AI Reduces AI Hallucinations

Knowledge Graph AI solves the challenges posed by traditional LLMs. Instead of generating answers based on language patterns, it retrieves factual information from structured, pre-verified sources.

Unlike LLMs, Knowledge Graph AI does not hallucinate because it pulls data directly from approved repositories. It doesn’t guess—it retrieves.

As the whitepaper Knowledge Management in the Virtual Agent Era puts it, “We need the accuracy, scalability, and performance of knowledge graph databases combined with conversational access.” This method ensures AI provides reliable, contextually accurate information while staying aligned with your business goals.

Key benefits of Knowledge Graph AI:

- Fact-Based Accuracy: Answers are rooted in pre-approved, verified data.

- Full Transparency: You know where each response comes from.

- Consistent Results: AI delivers the same reliable answers every time.

For businesses, this means fewer errors, improved trust, and AI systems that enhance your brand’s reputation instead of risking it.
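
To make “it doesn’t guess, it retrieves” concrete, here is a minimal Python sketch of fact-based lookup. It is a toy for illustration, not kama.ai’s implementation: the `Fact`, `KnowledgeGraph`, and `answer` names, the single-hop (subject, relation) lookup, and the sample bank data are all hypothetical. It mirrors the three benefits listed above: answers come only from stored facts, each answer carries its source, and the same question always returns the same answer. When no approved fact exists, it says so instead of composing something plausible.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Fact:
    """One approved statement: subject --relation--> value, plus where it came from."""
    subject: str
    relation: str
    value: str
    source: str  # provenance, so every answer can be traced back


class KnowledgeGraph:
    """Toy in-memory store of approved facts, keyed by (subject, relation)."""

    def __init__(self) -> None:
        self._facts: dict[tuple[str, str], Fact] = {}

    def add(self, fact: Fact) -> None:
        self._facts[(fact.subject.lower(), fact.relation.lower())] = fact

    def lookup(self, subject: str, relation: str) -> Optional[Fact]:
        # Exact retrieval: either an approved fact exists or nothing comes back.
        return self._facts.get((subject.lower(), relation.lower()))


def answer(graph: KnowledgeGraph, subject: str, relation: str) -> str:
    fact = graph.lookup(subject, relation)
    if fact is None:
        # No approved fact: say so rather than improvise a plausible answer.
        return "I don't have an approved answer for that yet."
    return f"{fact.value} (source: {fact.source})"


kg = KnowledgeGraph()
kg.add(Fact("savings account", "interest rate", "2.5% per year", "rates-page"))  # hypothetical data
print(answer(kg, "Savings Account", "interest rate"))  # 2.5% per year (source: rates-page)
print(answer(kg, "mortgage", "interest rate"))         # I don't have an approved answer for that yet.
```

A production system would also need to map a customer’s free-text question onto a graph query, and real knowledge graphs support multi-step traversal across relations; this sketch skips both by taking the subject and relation directly as inputs.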

Adding Human Oversight: A Safety Net for Accuracy

While Knowledge Graph AI eliminates hallucinations, human oversight adds an important layer of trust and quality assurance. At kama.ai, the highest level of safety comes from our Sober Second Mind® approach, in which humans validate AI responses to ensure they meet the highest standards of accuracy and brand alignment.

Here’s how human oversight enhances AI performance:

- Pre-Validation: Experts select and pre-approve the data used by the Knowledge Graph AI.

- Ongoing Monitoring: Human agents review critical AI responses to catch inconsistencies or gaps.

- Complex Queries: If a question is too complex, the AI agent seamlessly hands it off to a live human agent for resolution.

This human-in-the-loop approach blends AI’s speed and efficiency with human judgment. It guarantees that customer interactions are accurate, safe, and on-brand.
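
The three oversight steps above can be sketched as a simple router. This is an illustrative assumption, not the Sober Second Mind® implementation: `ApprovedAnswer`, `Router`, `HANDOFF`, and `SENSITIVE_TOPICS` are hypothetical names, and the customer’s question is assumed to have already been classified into a topic. Only expert-approved entries are served (pre-validation), every outcome is logged for later review (ongoing monitoring), and sensitive topics or questions without an approved answer are handed off to a live agent (complex queries).

```python
from dataclasses import dataclass, field

# Hypothetical topics that always go to a person, even if an approved answer exists.
SENSITIVE_TOPICS = {"complaint", "fraud", "bereavement"}

# Hypothetical marker meaning "route this conversation to a live human agent".
HANDOFF = "[handed off to a live agent]"


@dataclass(frozen=True)
class ApprovedAnswer:
    text: str
    approved_by: str  # the expert who pre-validated this entry


@dataclass
class Router:
    # Only pre-validated answers are ever stored here, keyed by topic.
    answers: dict[str, ApprovedAnswer]
    # (question, outcome) pairs that human reviewers can audit later.
    review_log: list[tuple[str, str]] = field(default_factory=list)

    def handle(self, topic: str, question: str) -> str:
        if topic in SENSITIVE_TOPICS:
            self.review_log.append((question, "escalated: sensitive"))
            return HANDOFF
        entry = self.answers.get(topic)
        if entry is None:
            self.review_log.append((question, "escalated: no approved answer"))
            return HANDOFF
        self.review_log.append((question, f"answered: approved by {entry.approved_by}"))
        return entry.text


router = Router({"opening hours": ApprovedAnswer("We are open 9am to 5pm, Monday to Friday.", "operations team")})
print(router.handle("opening hours", "When are you open?"))  # We are open 9am to 5pm, Monday to Friday.
print(router.handle("fraud", "Someone used my card."))      # [handed off to a live agent]
print(router.handle("mortgage", "Can I refinance?"))        # [handed off to a live agent]
```

The design choice here is that escalation is the default: the bot answers only when an approved entry exists and the topic is not flagged, so a gap in the knowledge base produces a handoff rather than a guess.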

Protecting Your Brand with Reliable AI

Deploying AI means placing your brand’s reputation on the line. If a virtual agent delivers incorrect or inappropriate answers, the damage to customer trust can be significant. Building AI systems that prioritize accuracy is essential to protecting your business.

At kama.ai, our solutions combine Knowledge Graph AI with human oversight to ensure reliability. Here’s what you can expect:

- Verified Information: AI only pulls answers from trusted, fact-checked sources.

- Aligned with Brand Values: Responses reflect your organization’s voice and principles.

- Risk Reduction: Human oversight acts as a final filter for complex or sensitive matters.

The result is AI you can trust to deliver correct answers while enhancing customer satisfaction and strengthening your brand.

The Value of Human-Centered AI

AI should empower human capabilities, not replace them. It should take over the mundane tasks that human agents handle today, freeing them to focus on oversight, strategy, and complex projects. By embedding human oversight into AI systems, businesses create solutions that are both efficient and trustworthy. The whitepaper underscores this idea: “AI must serve people first and foremost.”

For companies looking to reduce AI hallucinations, Knowledge Graph AI is a powerful alternative to traditional LLMs. It aligns AI performance with human values, minimizes errors, and builds trust with every customer interaction.

Conclusion: Eliminating AI Hallucinations with Trustworthy AI

Reducing AI hallucinations is critical to delivering reliable and responsible AI systems. While LLMs excel in certain areas, their inability to guarantee factual accuracy makes them risky for customer-facing roles.

By leveraging Knowledge Graph AI and human oversight, businesses gain a safer, more dependable solution. This combination ensures your AI delivers accurate, trustworthy responses that protect your brand and build customer confidence.

At kama.ai, we prioritize trust and reliability in every AI solution we create. With Knowledge Graph AI, we help businesses eliminate AI hallucinations, improve customer satisfaction, and confidently navigate the future of AI.

To learn more about reducing risks and delivering reliable AI, download our whitepaper Knowledge Management in the Virtual Agent Era. No strings attached, just insights that make your AI smarter and safer.