The Pilot Illusion

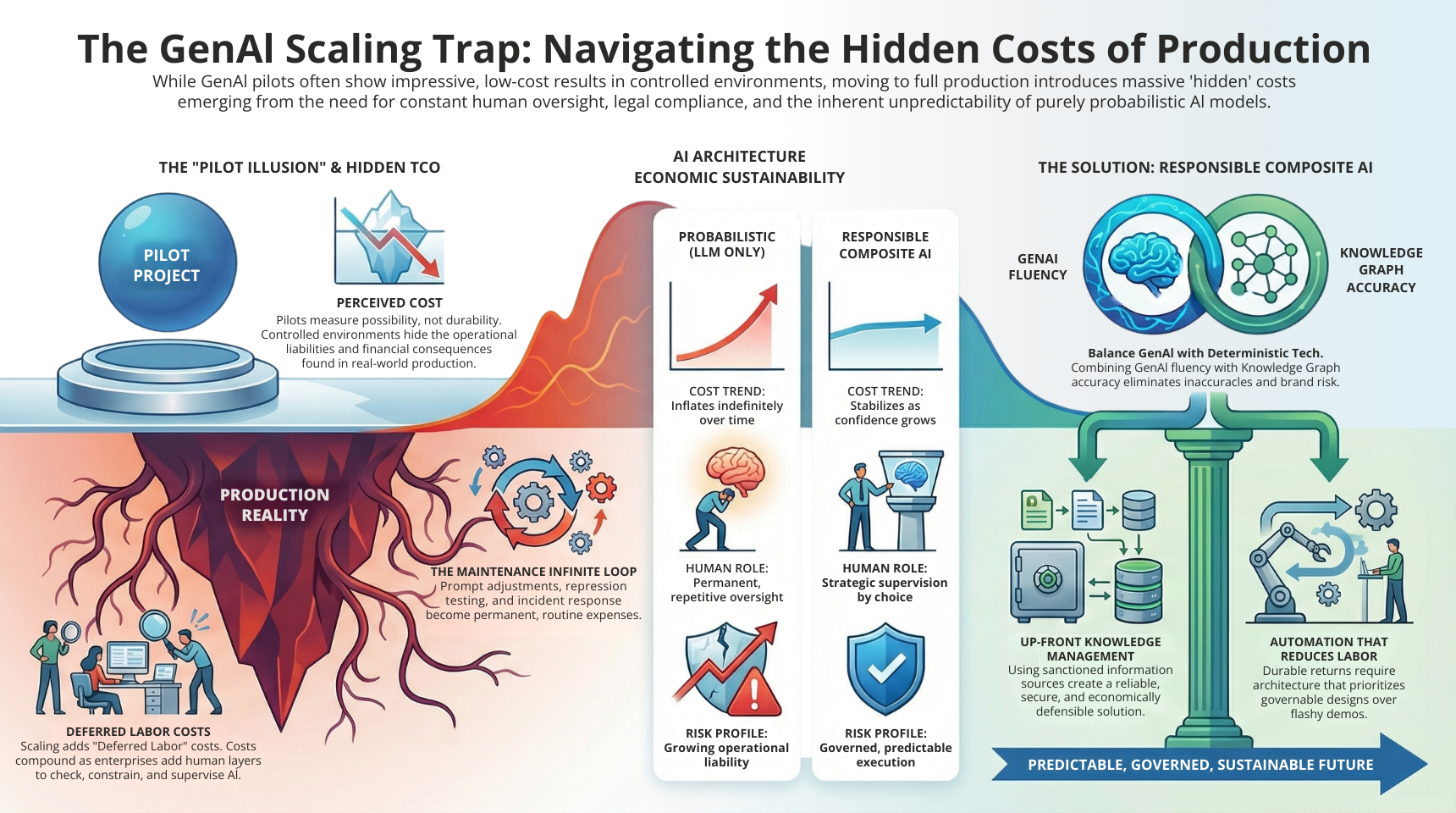

Like many projects, a new generative AI initiative usually starts with optimism and confidence. Teams launch pilots quickly and demonstrate impressive capabilities. Early results feel productive, fast, and relatively inexpensive. Executives see momentum and expect scalable enterprise value.

Pilots are set up to succeed. They operate in controlled environments with limited operational exposure. Outputs rarely trigger real decisions or irreversible actions. Mistakes feel acceptable because the consequences remain distant.

This is where expectations quietly diverge from reality. Pilots measure possibility rather than durability. They showcase fluency instead of accountability. Cost assumptions form before risk appears, and that gap becomes far more expensive once the project moves toward production. At this point it is wise to consider your risk profile and whether you have the right AI Agent technology.

Production Introduces Accountability

Production environments change everything immediately. AI outputs now influence customers, employees, and regulated workflows. Errors stop being academic experiments. They become operational liabilities with financial consequences. Hallucinations and information drawn from unreliable or unsavoury sources quickly become brand and reputational risks.

Accuracy expectations rise sharply. Legal teams become involved quickly. Compliance reviews expand across workflows. Brand risk enters every conversation. Oversight grows rather than shrinks.

Costs follow responsibility. Each safeguard adds process and labour. Each review step adds delay and expense. What appeared efficient now feels fragile. The operating model strains under real conditions.

Where Total Cost of Ownership Actually Emerges

Most GenAI costs do not appear on pricing sheets. They emerge inside operations and governance layers. Probabilistic systems are troublesome because they need constant human oversight. Human validation becomes a permanent, repetitive task rather than a transitional one.

As usage scales, variability scales alongside it. Enterprises respond by adding controls and approvals. Each control introduces friction and expense. Costs compound rather than stabilize over time.

The system never settles. Prompt adjustments continue indefinitely. Regression testing follows every model update. Incident handling becomes routine. Total cost grows silently quarter after quarter.

Why Purely Probabilistic Architectures Inflate Costs

Probabilistic systems never converge to certainty. It is extremely difficult to keep an AI based on a large language model (LLM) within its guardrails. Companies often end up adding further layers of AI to check and constrain the first, which erodes most of the benefits they originally anticipated. Enterprises compensate by adding people and more processes. Complexity increases faster than delivered value.

Agentic designs only amplify this problem. Autonomous actions demand authorization, rollback, and traceability. Wrong actions carry higher costs than wrong answers. Risk accelerates faster than productivity gains.

Hidden cost drivers typically include:

- Continual and duplicative human review and escalation

- Prompt maintenance and retraining cycles

- Regression testing after model updates

- Legal, compliance, and audit preparation

- Incident response and brand remediation

Each cost persists indefinitely. None decline with scale alone. Oversight remains necessary because unpredictability remains embedded.

Start with Responsible Composite AI to Lower TCO

Sustainable AI economics need predictability by design. Deterministic systems reduce variability inherently. Governed execution limits supervision needs. Costs stabilize as confidence grows.

Responsible Composite AI Agents are a good answer to these problems because they balance generative fluency with deterministic accuracy. For example, GenAI's Sober Second Mind provides a rapid-deployment platform that gives you the benefits of Generative AI with the accuracy of deterministic knowledge graph AI technologies. It builds human-in-the-loop workflows in up front. It requires AI knowledge managers to aggregate and manage the company's sanctioned information sources. In return, the enterprise develops one system, one approach, and one workflow, and gains a reliable, secure solution that can all but eliminate inaccuracies and brand risk from its AI Agent deployment.

This balance changes the economics fundamentally. Humans supervise by choice, not out of necessity. Automation reduces labour rather than deferring it. Value compounds instead of eroding.

If AI requires increasing oversight, it is not automation. It is deferred labour at scale. Enterprises seeking durable returns need to prioritize getting the right architecture in place over novelty and flashy demos.

Enterprise teams need to ask whether the AI solution in question is economically defensible, governable, and safe at scale. As AI systems move closer to real decisions and real actions, architecture defines whether the project provides a positive ROI or becomes a growing liability. The organizations that succeed will not be those that ran the most pilots, but those that choose designs that reduce risk, stabilize costs, and earn trust over time.

If your GenAI pilot looked promising but production economics now feel fragile, it may be time to reassess the foundation. kama.ai works with enterprises to evaluate AI risk profiles, governance requirements, and total cost of ownership before problems compound. A short, practical discussion can clarify whether your current approach will scale safely, or quietly inflate costs over time. Let’s talk.