Shifting from Chat to Actions

AI’s journey has moved at an astonishing pace. In just five years, we’ve gone from AI that answers questions to AI that performs actions. GenAI tools like ChatGPT sparked excitement with their human-like fluency, but this phase is already evolving. The next frontier? AI that manages complex, multi-step tasks – at scale, within enterprises.

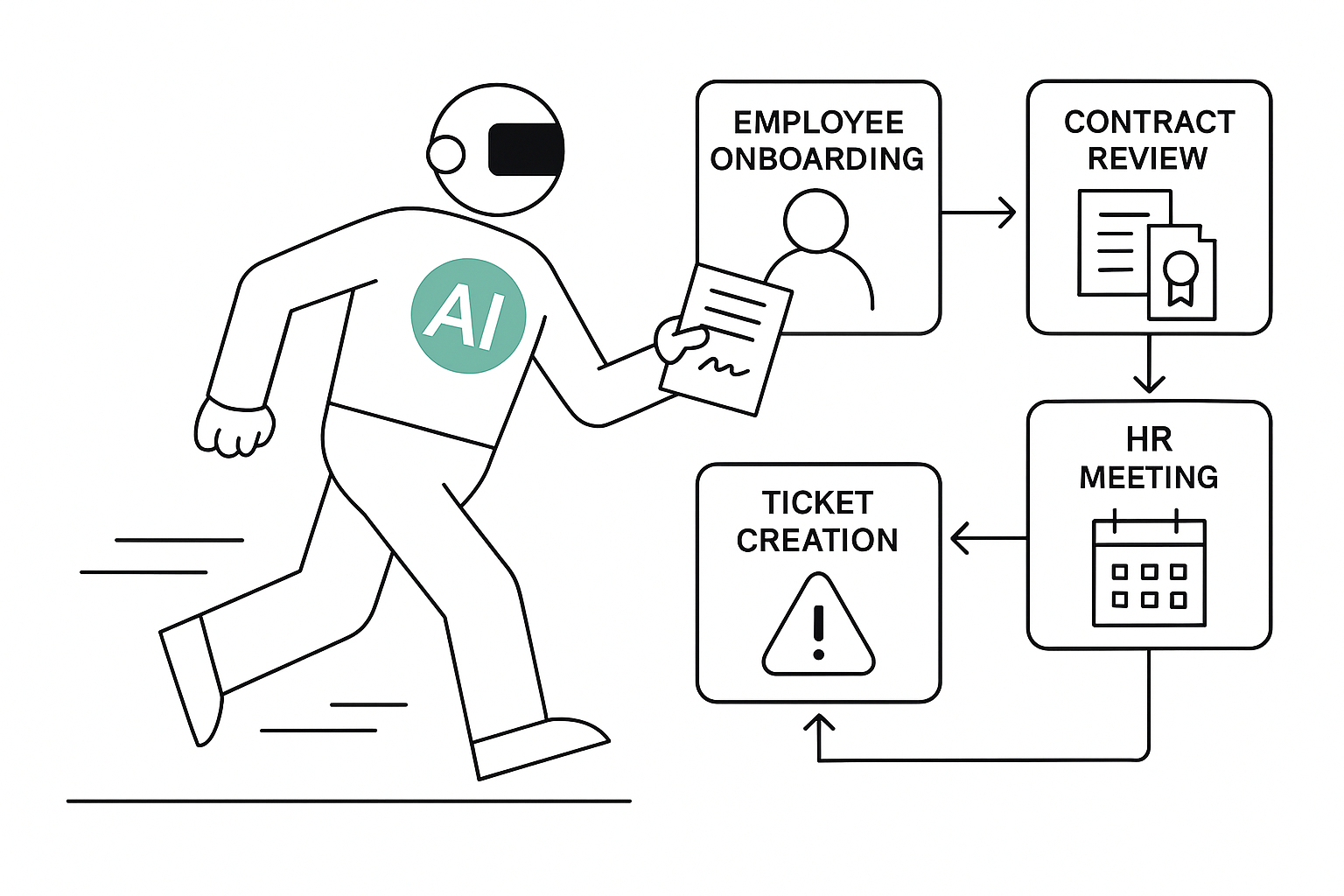

These aren’t trivial interactions. We’re talking about AI agents that onboard employees, draft legal clauses, resolve billing disputes, and even orchestrate HR meetings autonomously. The goal isn’t just to reply – it’s to take an action. And when machines start acting on our behalf, one thing becomes clear: trust needs to lead the way.

But GenAI Alone Isn’t Enough

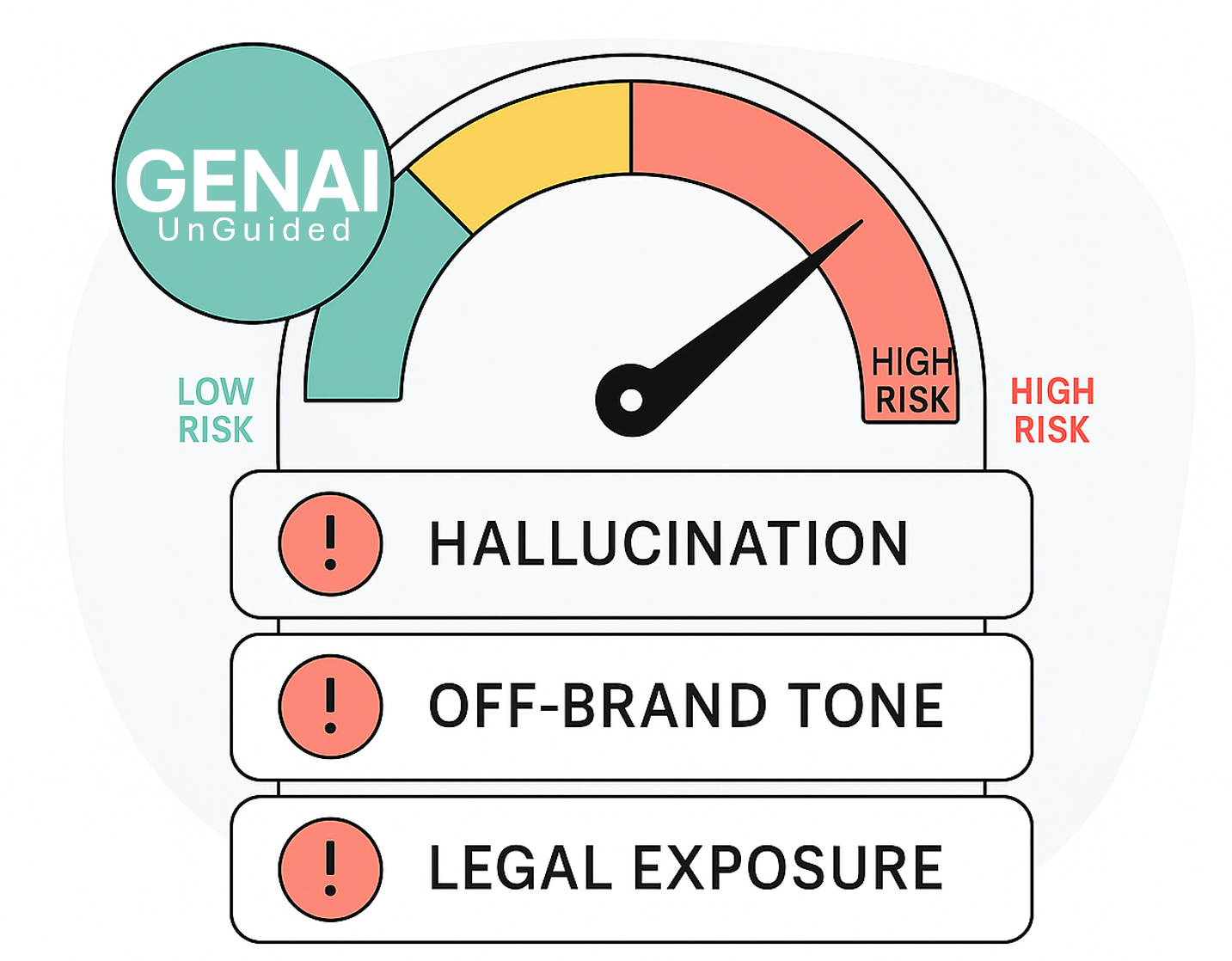

As exciting as GenAI is, it comes with significant risks. A 2024 Oxford study showed that ChatGPT 4 hallucinated in 58% of test queries. McKinsey’s 2025 State of AI report revealed that 47% of companies experienced consequences from faulty GenAI outputs. These are not edge cases. They’re indicators of a structural issue: GenAI tends to be confident and fluent, but often wrong.

Enterprises have learned the hard way: just because an AI sounds right doesn’t mean it is right. This matters deeply in healthcare, law, and finance, where errors carry real-world consequences. So, while the temptation is to go all-in on GenAI, many organizations are pausing, reevaluating, and shifting strategy.

We don’t need less AI. We need better AI.

A Hybrid Approach to Complex Workflows

That’s where Responsible Hybrid AI steps in. These systems combine deterministic AI – based on sanctioned knowledge graphs – with governed GenAI and Robotic Process Automation (RPA). The deterministic, knowledge graph side ensures accuracy. The generative side brings creativity. And RPA ties it all together to execute tasks end-to-end.

Let’s be clear: this is not GenAI with a safety net. It’s AI with purpose-built scaffolding. Every answer is reviewed or labeled. No public web scraping. No off-brand tone. No untraceable actions.

Imagine asking about benefits. Hybrid AI retrieves verified information, logs the interaction, schedules a follow-up, and notifies HR – all without troubling a human. These tasks are mundane, repetitive, and time-consuming, which makes them ideal candidates for full AI automation. That’s the level of autonomy we’re heading toward. In some use cases, such as onboarding or NDA reviews, companies report a 40–60% reduction in response time. The promise and the opportunities are real.
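To make the benefits example concrete, here is a minimal Python sketch of that four-step flow: retrieve, log, schedule, notify. Everything in it is an assumption made for illustration (the `VERIFIED_BENEFITS` table, the `handle_benefits_question` function, the seven-day follow-up window); it is not taken from any specific product.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical verified content, standing in for a sanctioned knowledge source.
VERIFIED_BENEFITS = {
    "dental": "Dental coverage starts after 90 days of employment.",
}


@dataclass
class WorkflowResult:
    answer: str
    audit_log: List[str] = field(default_factory=list)
    follow_up_on: Optional[date] = None
    notifications: List[str] = field(default_factory=list)


def handle_benefits_question(employee: str, topic: str, today: date) -> WorkflowResult:
    """Retrieve a verified answer, log it, schedule a follow-up, and notify HR."""
    answer = VERIFIED_BENEFITS.get(topic)
    if answer is None:
        # No verified content: escalate to a person instead of guessing.
        result = WorkflowResult(answer="No verified answer found; escalated to HR.")
        result.audit_log.append(f"{employee} asked about '{topic}': escalated")
        result.notifications.append(f"HR: {employee} needs help with '{topic}'")
        return result

    result = WorkflowResult(answer=answer)
    result.audit_log.append(f"{employee} asked about '{topic}': answered from verified content")
    # Assumed follow-up window of seven days.
    result.follow_up_on = today + timedelta(days=7)
    result.notifications.append(f"HR: {employee} received the verified '{topic}' answer")
    return result
```

Calling `handle_benefits_question("Ana", "dental", date(2025, 1, 1))` returns the verified answer along with one audit entry, a follow-up date, and one HR notification, so every action the system took stays traceable.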

Counterpoints: Innovation and Flexibility Matter

Still, the Hybrid AI model isn’t without criticism.

One concern is innovation velocity. Deterministic systems, while safe, can be rigid. Their reliance on curated content and human approvals may slow agility – especially in fast-moving industries. But the rise of Trusted Collections and vector-based RAG (Retrieval-Augmented Generation) offers a fix. With intelligent fallback mechanisms like kama.ai’s Sober Second Mind®, answers outside the Knowledge Graph can be generated safely using internal content only – not the open web. That allows scale without sacrificing trust.

Another criticism is the claim of “zero hallucinations.” No system is perfect. Even deterministic content is subject to human error. However, systems like kama.ai’s can achieve near-zero hallucination because they separate sanctioned answers from AI-generated content and clearly label the difference for the user. In high-stakes environments, this layered transparency matters more than blanket promises.
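The routing idea behind this kind of layering can be sketched in a few lines of Python. This is an illustrative toy, not kama.ai’s implementation: the `SANCTIONED` dictionary stands in for a knowledge graph, `TRUSTED_DOCS` stands in for a trusted collection, and simple keyword matching stands in for vector-based retrieval and a governed generative model. All of these names are assumptions.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical sanctioned answers, standing in for a curated knowledge graph.
SANCTIONED = {
    "vacation policy": "Full-time employees accrue 15 vacation days per year.",
}

# Hypothetical internal documents, standing in for a trusted collection.
TRUSTED_DOCS = [
    "Parking passes are issued by Facilities on the first day of each month.",
    "Expense reports must be submitted within 30 days of purchase.",
]


@dataclass
class Answer:
    text: str
    source: str  # "sanctioned", "generated", or "none"


def retrieve(question: str) -> List[str]:
    """Toy keyword retrieval over internal documents only (no web access)."""
    words = {w.strip("?.,!").lower() for w in question.split()}
    words = {w for w in words if len(w) > 3}
    return [doc for doc in TRUSTED_DOCS if any(w in doc.lower() for w in words)]


def answer(question: str) -> Answer:
    """Prefer a sanctioned answer; fall back to internal content; label the source."""
    key = question.strip().strip("?").lower()
    if key in SANCTIONED:
        return Answer(SANCTIONED[key], "sanctioned")
    docs = retrieve(question)
    if docs:
        # A real system would hand these passages to a governed generative model.
        # This sketch simply returns the first matching passage.
        return Answer(docs[0], "generated")
    return Answer("No approved answer available; routing to a human.", "none")
```

The `source` field is the point of the sketch: the caller can always show the user whether a reply came from sanctioned content or from the generative fallback, and a question with no internal match goes to a person rather than to the open web.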

Adoption Challenges Still Exist

Even with all the advances, deployment isn’t simple. Informatica’s 2025 report shows that only 48% of AI pilots reach production, and those that do take eight months on average to deploy. Over 80% of AI projects still fail, double the rate of traditional IT programs. Why? Often because the technology lacks governance, explainability, and enterprise-grade integration, or because employees don’t adopt it.

This is where Hybrid AI excels. By using trusted internal content, verified knowledge graphs, and contained generative models, these systems are easier to audit, control, and scale. Roles are clearly defined – Knowledge Managers, IT leads, and SMEs all play a part. This shared governance model is what moves AI from pilot to production.

So, Is the Next Big Area of AI Complex Tasks?

Yes. But not just because AI can take on complex tasks. It’s because we’ve reached the point where complex tasks are the natural next step in AI’s evolution. This is the realm of AI agents that bring true productivity improvements to businesses. Enterprises are asking more of their digital systems: faster workflows, better compliance, consistent brand tone, and improved user experience.

However, this leap succeeds only with structure, trust, and clear governance. That’s why Responsible Hybrid AI is emerging as the real answer, not just for today but for the decade ahead. It’s not about replacing humans. It’s about delegating the mundane tasks. It’s about enabling smarter decisions, safer actions, and scalable automation.

In short: The next big area of AI isn’t about answers. It’s about action. And only those systems built for complex, trustworthy execution will lead the way.

Ready to move from chatbot to trusted teammate?

If you’re exploring AI for high-value workflows – HR, legal, customer service – it’s time to think beyond prompts. Let’s discuss how Responsible Hybrid AI can help you automate with confidence.