AI Benefits and Risks: Is Balance Better?

The rise of large language models (LLMs) is reshaping industries worldwide. These advanced AI models have brought incredible benefits. They are enabling companies to automate complex tasks, streamlining communication, and transforming content generation. With capabilities that range from generating coherent articles to simulating human-like conversations, LLMs have ushered in a new era of possibility. But while the benefits of AI are immense, the risks are equally striking—and in some cases, unsettling.

In recent days several articles have been published about this exact topic. Headlines scream:

- AI transcription tools ‘hallucinate,’ too

- AI Chatbots Ditch Guardrails

- AI-powered transcription tool used in hospitals reportedly invents things no one ever said

- OpenAI Research Finds That Even Its Best Models Give Wrong Answers

All of these highlight that we need to be vigilant rather than simply trusting whatever our LLMs tell us. They emphasize the continued importance of accuracy in AI responses. They also stress the need for humans to be in the loop to ensure sound judgment at important decision points.

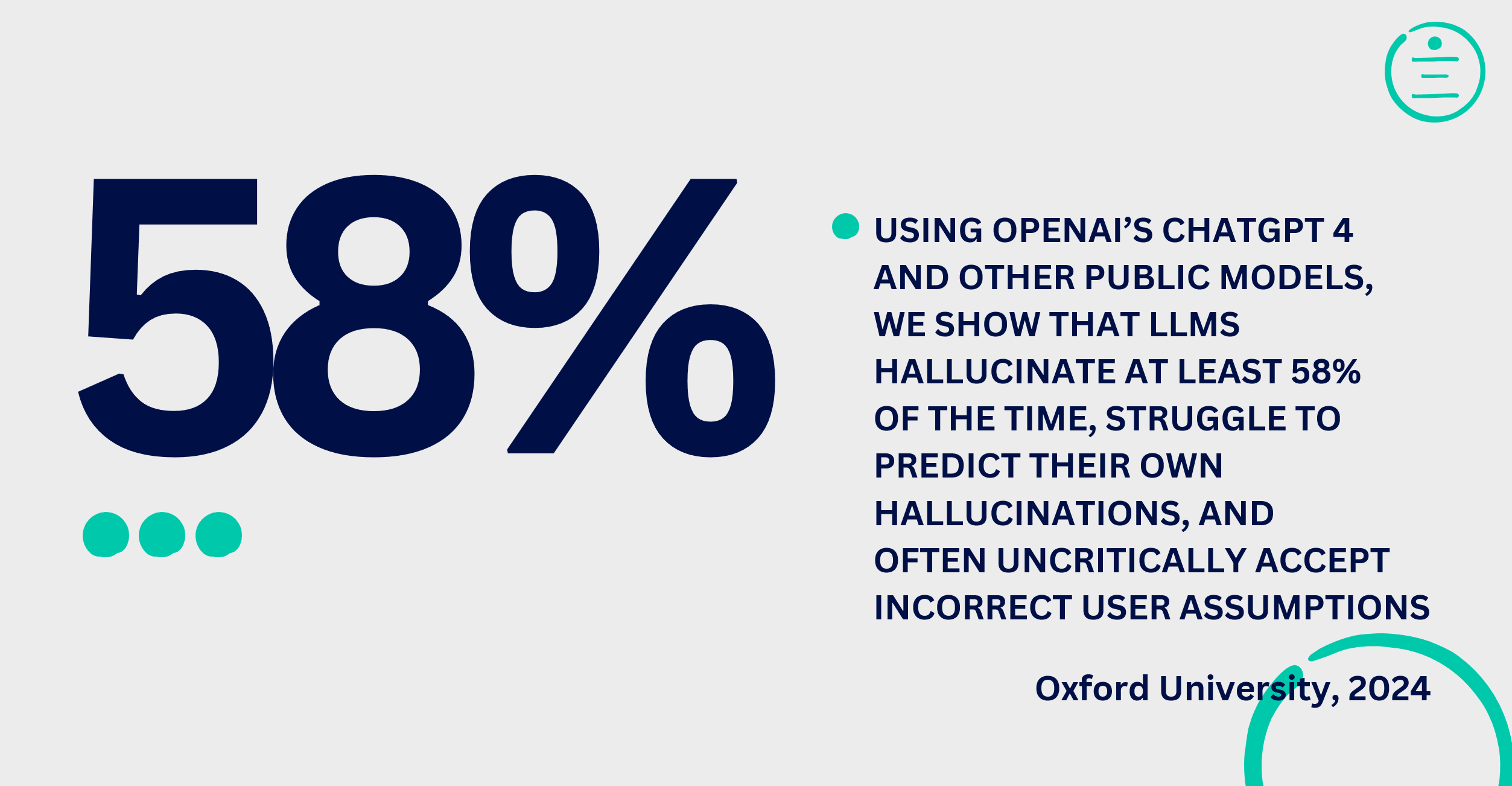

A 2024 study from Oxford University reveals just how significant these risks are, noting that LLMs “hallucinate” almost 58% of the time. This includes producing information that appears factual but is, in reality, completely fabricated. Supporting these findings, OpenAI’s own benchmark test of its o1-preview model shows an accuracy rate of only 42.7%, meaning 57.3% of its answers were not correct. In effect, even the most advanced LLMs can produce more incorrect responses than correct ones on such tests. These error rates are especially alarming given how broadly LLMs are used. Today, they support everyone from medical professionals to customer service representatives.

AI Benefits and Risks in High-Stakes Environments

The benefits of AI, including LLMs, are undeniable. According to kama.ai, a provider of responsible AI solutions, generative AI has grown rapidly since 2022. It has transformed daily life and become a mainstream tool, affecting everything from creative content generation to enterprise customer support. But this fast-paced adoption comes with challenges. A 2023 KPMG study cited in kama.ai’s Responsible AI report found that “73% of people across the globe report feeling concerned about the potential risks of AI,” including privacy breaches, biased outcomes, and cybersecurity threats. In highly regulated industries like healthcare, finance, and law, these risks could lead to dire outcomes if AI-generated responses go unchecked.

For example, in healthcare, AI-driven transcription tools are increasingly used to document patient interactions. Yet recent studies show these tools are prone to hallucinations: making up medical conditions, drug names, and other critical details. In one documented case, a transcription system invented a medication, “hyperactivated antibiotics,” that doesn’t exist. For professionals relying on accurate patient data, errors like these could result in misdiagnoses or inappropriate treatments. This underscores the risks of adopting AI without a focus on accuracy and without responsible guardrails.

In fact, risks extend beyond hallucinations and transcription errors. LLMs have also proven vulnerable to manipulation through techniques like prompt injection attacks. A new “Deceptive Delight” method, developed by Palo Alto Networks, revealed that specific prompts could trick LLMs into bypassing their safety filters and producing responses that were intended to be restricted. Such methods present a growing risk as these models become embedded in public and private systems alike. This is especially concerning for organizations equipping their chatbots with AI technology, where keeping responses on-brand, accurate, and unbiased is a brand imperative.

Human Oversight and Responsible AI

The Responsible AI framework, detailed in kama.ai’s Responsible AI report, emphasizes that when it comes to AI benefits and risks, transparency, human oversight, and ethical governance are essential to mitigate harm. “Responsible AI is an umbrella term for aspects of making appropriate business and ethical choices,” the report states. It goes on to highlight critical pillars like fairness, bias mitigation, explainability, and privacy. As more organizations integrate AI into their operations, ensuring these systems adhere to responsible practices is essential to building public trust and avoiding reputational damage.

Moreover, the concept of “human-in-the-loop” AI, a process where human experts monitor and validate AI-generated outputs, has emerged as a crucial safeguard in high-stakes applications. For instance, kama.ai’s Responsible AI model uses human oversight to review responses generated by its conversational AI platform. This process lets businesses ensure that information provided to clients, stakeholders, and employees is accurate and aligned with brand values. A Deloitte study found that while “management of AI-related risks” is a top concern, only 33% of companies have integrated AI risk management into their broader risk frameworks. This gap points to an urgent need for responsible practices across sectors.
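To make the human-in-the-loop idea concrete, here is a minimal Python sketch of a review gate. It is an illustration only, not a description of how kama.ai or any other platform implements oversight. The names `Draft`, `ReviewQueue`, and the reviewer label are hypothetical. The one rule it shows is that an AI-generated answer is held back until a person has explicitly approved it.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Draft:
    """An AI-generated answer waiting for a human decision (hypothetical type)."""
    question: str
    answer: str
    approved: Optional[bool] = None  # None means "not reviewed yet"
    reviewer: Optional[str] = None


@dataclass
class ReviewQueue:
    """Holds drafts and releases only those a human has approved."""
    drafts: List[Draft] = field(default_factory=list)

    def submit(self, question: str, answer: str) -> Draft:
        # New drafts always start unreviewed, so nothing is published by default.
        draft = Draft(question, answer)
        self.drafts.append(draft)
        return draft

    def review(self, draft: Draft, reviewer: str, approve: bool) -> None:
        # Record both the decision and who made it, for later audit.
        draft.approved = approve
        draft.reviewer = reviewer

    def publishable(self) -> List[Draft]:
        # Unreviewed (None) and rejected (False) drafts are both excluded.
        return [d for d in self.drafts if d.approved is True]


queue = ReviewQueue()
first = queue.submit("What are your support hours?", "We are open 9 to 5 on weekdays.")
second = queue.submit("Do you offer refunds?", "Yes, always, with no conditions.")

queue.review(first, reviewer="support-lead", approve=True)
queue.review(second, reviewer="support-lead", approve=False)

for draft in queue.publishable():
    print(draft.question, "->", draft.answer)
```

The design choice worth noticing is the default: a draft that nobody has looked at is treated the same as a rejected one, so a gap in review never turns into a published answer.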

Complementing LLMs with Knowledge Graph AI

The limitations and vulnerabilities of LLMs don’t suggest that AI technology is inherently unreliable. Instead, these challenges highlight the importance of a balanced, diversified approach. Rather than relying solely on LLMs for every application, companies can use complementary AI technologies to reduce the risks and enhance the benefits of AI.

One promising alternative is Knowledge Graph AI technology. It focuses on structured data and emphasizes accuracy and transparency over generative capabilities. Knowledge Graphs are built on data structures that capture relationships and hierarchies, allowing for fact-based responses without the risk of hallucination. This is a different kind of AI technology, one that complements generative AI systems. Because Knowledge Graphs incorporate human input at critical stages, they align well with the Responsible AI framework’s emphasis on human-centered oversight. Knowledge Graph AI solutions let companies deliver information that is highly accurate, culturally sensitive, and adaptable to different contexts. This is a powerful contrast to the often unpredictable output of LLMs.
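A toy Python sketch can show why a structured lookup behaves differently from text generation. This is a simplified assumption about how such a system might work, not kama.ai’s actual design. The class `KnowledgeGraph`, the `drug_class` relation, and the reviewer label are all hypothetical. Facts are stored as subject, relation, and value entries that a person has approved, and a question is answered only if a matching entry exists.

```python
from typing import Dict, NamedTuple, Optional, Tuple


class Fact(NamedTuple):
    """A stored value plus the person who approved it (hypothetical type)."""
    value: str
    approved_by: str


class KnowledgeGraph:
    """A tiny fact store keyed by (subject, relation)."""

    def __init__(self) -> None:
        self._facts: Dict[Tuple[str, str], Fact] = {}

    def add_fact(self, subject: str, relation: str, value: str, approved_by: str) -> None:
        # Subjects are lower-cased so lookups are case-insensitive.
        self._facts[(subject.lower(), relation)] = Fact(value, approved_by)

    def lookup(self, subject: str, relation: str) -> Optional[Fact]:
        # Returns None when nothing was stored; it never invents a value.
        return self._facts.get((subject.lower(), relation))


def answer(graph: KnowledgeGraph, subject: str, relation: str) -> str:
    fact = graph.lookup(subject, relation)
    if fact is None:
        return "No verified answer on file."
    return f"{fact.value} (reviewed by {fact.approved_by})"


graph = KnowledgeGraph()
graph.add_fact("amoxicillin", "drug_class", "penicillin-type antibiotic",
               approved_by="staff pharmacist")

print(answer(graph, "Amoxicillin", "drug_class"))
print(answer(graph, "hyperactivated antibiotics", "drug_class"))
```

In this sketch, asking about the made-up “hyperactivated antibiotics” produces the fallback message rather than a plausible-sounding invention, because the store can only return what a reviewer put into it. Real Knowledge Graph systems are far richer, but the same property is what makes their answers traceable.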

Where accuracy and reliability are paramount, such as in healthcare and finance, Knowledge Graph AI offers a solid, interpretable foundation. This technology provides a structured way to handle information. It ensures that data accuracy is prioritized and that human values and ethical standards are embedded into the core processes. Combining Knowledge Graph AI with LLMs lets organizations use the creative flexibility of GenAI while ensuring dependable and precise information delivery.

The Path Forward: Building a Diverse and Resilient AI Ecosystem

As AI becomes an essential part of our digital world, a narrow focus on one type of model, no matter how powerful, can expose organizations to unnecessary risks. When planting a new forest, you want to choose a variety of species so that it grows to be robust and disease-resilient. Likewise, an AI ecosystem reliant on a single model is exposed to that model’s specific vulnerabilities and weaknesses. A diverse AI ecosystem that includes multiple technologies will better support a wide array of applications with different needs for accuracy, creativity, and control.

LLMs, with their broad ability to generate diverse and engaging content, are a valuable tool in conversational roles. However, as the report on Responsible AI underscores, integrating Knowledge Graph AI and similar technologies provides built-in safeguards. These complementary models let companies avoid risks while fully harnessing the benefits of AI, so their applications remain reliable, accurate, and aligned with organizational values.

Ultimately, the promise of AI is indeed transformative. But we must proceed with both excitement and caution. As we celebrate AI’s potential, let’s remember the importance of balancing innovation with responsibility. Embracing a diverse AI ecosystem lets us navigate AI benefits and risks effectively. Consider fostering a technology landscape that is as resilient as a biodiverse forest and as dynamic as the world we live in.

There is a reason we say “If it’s got to be right, it’s got to be kama.ai”. Simply put, our Knowledge Graph AI technology is designed for accuracy, with your organization’s human values baked in. If you need a system that protects your brand and lets you sleep at night, then let’s talk.