Agentic AI vs Responsible Composite AI Agents

AI Agents are now at the center of enterprise technology conversations. Much of the discourse has focused on what exactly defines an ‘AI Agent’. Industry observers equate ‘AI Agents’ to Agentic AI. This is where the Agent inherently has its own reasoning and planning, and therefore its own ‘agency’. It has the ability to autonomously (independently) ‘do things’. It’s true that ‘agency’, from a definition standpoint, does mean having free will, and the ability to decide on one’s own actions and future. However, there is an interesting assumption being made here… that having agency is really what we want in our enterprise digital assistants. But on deeper reflection, is it?

AI Agents: A Balance of Utility and Agency

A more pragmatic view is that an AI Agent’s utility, or value to users and host organizations, should not be defined by the engine running under the hood. It isn’t about whether the Agent possesses ‘free will’. Rather, in an enterprise context, an Agent’s value should be defined by whether it can be trusted to complete the task it was given. A further test is whether it can deliver accurate and valid information in response to a user’s request or inquiry. As AI evolves, it is important to consider exactly what we want in an Agent. Do we seek independent action and decision-making, or human-trained, governed, and trustworthy digital automation?

When a human asks for support from an AI Agent or digital employee, they are looking for a reliable system that will do the job effectively. It must deliver an outcome that adds value with trust and accuracy. If an Agent does so, then the methods and algorithms within the ‘black box’ are not important, so long as it completes the job well with minimal risk to the user and host enterprise. Reliable and trustworthy outcomes are far more important than whether the actions were the result of fully autonomous analysis, a strict algorithm, reliable knowledge graph AI, or a guard-railed large language model (LLM).

As AI Agents move closer to executing real actions, not just providing answers, this distinction becomes even more important. Wrong answers are troublesome. But wrong actions are far worse. They create significant financial, regulatory, and reputational risk. This concern is reflected in enterprise sentiments today. Enterprise leaders express anxiety around AI accuracy, reliability, and security. This is having a limiting effect on AI adoption, compromising the potential gains in productivity and customer satisfaction.

The hesitation to adopt autonomous AI Agents isn’t a resistance to innovation. Rather, it is a rational response to solutions that are increasingly positioned as autonomous decision-makers. Yet these AI systems provide questionable reliability and fall short on guardrails and other human-centered governance capabilities. Ultimately, it is the host enterprise that will be held accountable for the results, whether in legal courts or in the court of public opinion. If a major issue causes harm to an enterprise’s brand or reputation, it is the enterprise that shoulders the responsibility, NOT the AI Agent.

Gartner’s Observations

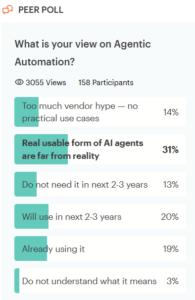

In a recent Gartner Peer Poll on Agentic Automation, shown in the image above, 14% of the 158 participants said they believe Agentic AI is simply overhyped by vendors. Another 31% felt the ‘real usability’ of AI Agents is currently far from reality. A total of 58% of those surveyed simply do not have confidence in Agentic AI or in the AI Agents of today. (source: Predicts 2026: The New Era of Agentic Automation Begins, 1 December 2025 – ID G00840857)

Further to this point, in June 2025, Gartner published a prediction that over 40% of Agentic AI projects would be cancelled by the end of 2027. In other words, there is an enormous sense of trepidation toward providing full autonomy, agency, and delegation of judgment to Agentic AI systems.

Probabilistic Agents: The Reason for Stalling AI Adoption?

Despite heavy marketing, many enterprise leaders remain skeptical about AI agent deployment. As expressed above, recent Gartner peer research shows that a significant portion of professionals believe usable AI agents are still far from reality. Others believe the market has been overhyped. Together, this reflects a deeper issue: trust has not kept pace with AI innovation and ambition.

Probabilistic agents based on large language models (LLMs) still suffer from hallucinations, inconsistent judgment, and unreliable orchestration. While they are impressive creative tools when used to augment human tasks, they struggle with source discrimination and contextual reliability when operating as autonomous agents. Humans know how to evaluate credibility, bias, and intent. Probabilistic systems have not been able to effectively emulate such judgment and deliver consistently reliable outcomes independently.

This gap explains why executives continue to cite lack of confidence as one of the top risks of Agentic AI. In high-stakes environments, uncertainty is unacceptable. Payroll, benefits administration, compliance workflows, and financial operations require exactness and complete accuracy. Even small errors can cascade into major consequences.

As an example, MIT published the GenAI Divide: State of AI in Business 2025 report in Jun 2025. In this report, MIT states, “Despite $30–40 billion in enterprise investment into GenAI, this report uncovers a surprising result in that 95% of organizations are getting zero return.” Part of the reason is the overhyped nature of Agentic AI systems and the excessive promises made about them. The research further suggests, “For organizations currently trapped on the wrong side, the path forward is clear: stop investing in static tools that require constant prompting, start partnering with vendors who offer custom systems, and focus on workflow integration over flashy demos. The GenAI Divide is not permanent, but crossing it requires fundamentally different choices about technology, partnerships, and organizational design.” Ultimately, using AI effectively within organizations is about intelligently working out how to apply the system so that it can make human-guided, well-governed decisions to improve processes and workflows.

If AI agents remain probabilistic-only, adoption will continue to stall. Viewing AI Agents exclusively in the domain of probabilistic Agentic AI therefore compromises the opportunity landscape. It limits the opportunity for automation, and for return on investment, with the technology available today.

Trust is Still Best Achieved with Human Experience

One way to understand human agency is through a real-world engineering example. When a team of engineers takes on a complex project, they do not rediscover physics or derive equations from first principles and original thought. They rely on proven formulas, existing tools, and established systems. In Artificial Intelligence terms, this is analogous to Deterministic or Symbolic AI that follows pre-determined, human-governed rules and experience. Probabilistic AI approaches (pure LLM systems) attempt to solve complex problems by training systems on massive amounts of data. But the consistency and accuracy of these systems have yet to be proven.

Going back to the engineer analogy, no one questions whether an engineer has agency or utility simply because they use deterministic methods. In fact, reliability is the expectation, and trust comes from human experience and science accumulated over hundreds or even thousands of years. In our case, an engineer’s role is intelligent orchestration. They identify problems, apply known and trusted knowledge, experience, and judgement, and put them together to deliver a working system. That same principle should apply to AI Agents. Trust comes from the results provided, rather than from whether the system is deterministic or probabilistic (one technology versus another).

If trust and reliability are important, and they almost always are, an AI Agent should use proven tools. These tools must be appropriate for the task based on human experience, known approaches, and structured procedures.

Better still, using a Composite AI Agent approach, LLM creative skills (probabilistic) can also be engaged to deliver additional responses and information based on enterprise information. These can use GenAI Retrieval Augmented Generation (RAG) techniques. That is, with appropriately curated RAG source information, generative responses can augment human curated information with tight control. In this case, we choose the best documents and information to inform the generative responses when gaps exist in fully deterministic approaches.
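The composite pattern described above can be sketched roughly as follows. This is a minimal illustration, not kama.ai's implementation: the names `CURATED_ANSWERS`, `TRUSTED_DOCUMENTS`, `retrieve`, `stub_generate`, and `answer` are all hypothetical, the sample policies are invented, the retrieval is a naive word-overlap ranking, and the generator is a stub standing in for a real LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Answer:
    text: str
    source: str  # "curated" (deterministic) or "generative" (RAG)


# Hypothetical human-curated answers: the deterministic path.
CURATED_ANSWERS = {
    "vacation policy": "Full-time employees accrue 15 vacation days per year.",
}

# Hypothetical curated collection that generative answers may draw on.
TRUSTED_DOCUMENTS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "Remote work requests are approved by the employee's direct manager.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of simple word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query; skip documents with none."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def stub_generate(query: str, context: list[str]) -> str:
    """Stand-in for an LLM call (an assumption); it only restates the context."""
    return "Based on approved documents: " + " ".join(context)


def answer(query: str, generate: Callable[[str, list[str]], str] = stub_generate) -> Optional[Answer]:
    """Prefer the curated answer; fall back to generation over trusted documents."""
    key = query.lower().strip(" ?")
    if key in CURATED_ANSWERS:
        return Answer(CURATED_ANSWERS[key], "curated")
    context = retrieve(query, TRUSTED_DOCUMENTS)
    if not context:
        return None  # no trusted source found: decline rather than guess
    return Answer(generate(query, context), "generative")
```

In this sketch the generative path is only reached when the curated knowledge has a gap, and it can only see documents from the curated collection. Returning `None` when nothing relevant is found is one possible design choice; a real system might hand the question to a human instead.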

Responsible Composite AI Agents with deterministic orchestration are an important option for enterprises. Such systems guarantee accuracy for the low risk-tolerance needs found in strict enterprise governance environments. Agency is a tremendous power when it is held by beings that possess ethical values. Importantly, it also means having the ability to balance logic, experience, and judgement in critical contextual situations. But even then, humans with agency know their level of accountability and autonomy. They know when to engage peer experts, superiors, or even boards of directors, all to balance decision-making with appropriate direction and governance on sensitive matters.

This is not to say that machines, or groups of machines, will never be able to achieve the level of balanced analysis, decision-making, and expert judgement discussed above. However, until Agentic AI approaches can achieve this autonomy reliably, human-trained deterministic orchestration provides safety. AI Agents built this way are safer, more reliable options for enterprise automation with autonomous digital employees or agents.

Enterprise Reality Demands Risk-Balanced AI Design

Enterprise environments are not uniform. Different tasks carry different risk profiles. Some workflows benefit from creativity and ambiguity. Others demand deterministic precision. Defining AI Agents as only those driven by probabilistic Agentic AI is a clear and dangerous mistake given the current state of technology. It clouds our view of the opportunity offered by innovative technologies that are here and can be used today.

Organizations are quickly moving toward agent orchestration platforms. These typically exist outside an enterprise’s application stacks, which makes governance, transparency, and reliability even more critical. Companies need to decide when AI agents should operate probabilistically and when they should function with deterministic orchestration. Making that choice deliberately offers a better solution. Responsible Composite AI Agents can deliver the best of both worlds for trustworthy enterprise autonomy today.

Beyond combining best-of-breed automation technologies, integrating human-in-the-loop governance and human-curated information is a critical part of the solution scope. High-risk tasks like payroll calculations, benefits changes, and compliance actions leave no room for hallucination or uncertain process orchestration. These workflows need processes that are approved in advance, predictable, auditable, and able to operate correctly every time. Failure to design AI Agents based on risk tolerance is why so many projects are failing. We need systems that can easily transition into production, with quick implementation phases, and that achieve clear returns on investment.
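One way to picture risk-based agent design is a small router that picks an execution mode per task and records every decision. The sketch below is illustrative only: the task names, the `TASK_RISK` and `APPROVED_WORKFLOWS` tables, and the `Router` class are hypothetical, and the rule that unknown tasks default to high risk is an assumption rather than something stated in this article.

```python
from dataclasses import dataclass, field
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


class Route(Enum):
    DETERMINISTIC = "deterministic_workflow"
    GENERATIVE = "governed_generative"
    HUMAN_REVIEW = "human_review"


# Hypothetical risk classification, maintained by people rather than by the agent.
TASK_RISK = {
    "payroll_calculation": Risk.HIGH,
    "benefits_change": Risk.HIGH,
    "compliance_action": Risk.HIGH,
    "draft_announcement": Risk.LOW,
}

# Hypothetical set of workflows that have been approved in advance.
APPROVED_WORKFLOWS = {"payroll_calculation", "benefits_change"}


@dataclass
class Router:
    # Each entry is (task, risk level, chosen route), kept for auditability.
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def route(self, task: str) -> Route:
        """Choose an execution mode from the task's risk tier and log the choice."""
        # Assumption: anything not classified is treated as high risk.
        risk = TASK_RISK.get(task, Risk.HIGH)
        if risk is Risk.LOW:
            decision = Route.GENERATIVE
        elif task in APPROVED_WORKFLOWS:
            decision = Route.DETERMINISTIC
        else:
            # High risk with no pre-approved workflow: hand off to a person.
            decision = Route.HUMAN_REVIEW
        self.audit_log.append((task, risk.value, decision.value))
        return decision
```

For example, `Router().route("payroll_calculation")` returns `Route.DETERMINISTIC`, while a high-risk task with no approved workflow, such as `"compliance_action"` in this toy table, is sent to human review.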

Responsible Composite AI Agents: Here Today

At kama.ai, AI agency is defined by execution and results, not ideology. The underlying technology matters less than whether the system can be trusted to complete the work. Generative AI is proving extremely useful in assisting human employees with research and generating drafts. However, when it comes to autonomous digital employees executing complex tasks, deterministic orchestration supplemented with generative RAG capabilities is a far better option.

Responsible Composite AI Agents from kama.ai deliver this best-of-both-worlds approach today. Deterministic knowledge graph AI, combined with Intelligent Automation (or RPA), delivers guaranteed process accuracy, while enterprise knowledge graphs deliver 100% responsible policy, procedure, product, and service information. Where gaps exist in human-curated information, Retrieval Augmented Generation (RAG) based on kama Trusted Collections provides the most responsible generative answers available. Human oversight and continuous improvement cycles ensure the ongoing performance and capability expansion of deployed agents.

From both the user and the host enterprise perspectives, the internal mechanics are irrelevant – what matters is trust. If the task is completed correctly and safely, the agent has demonstrated true agency and value to the enterprise.

Future: Trustability – Not Probability

The future of AI Agents will not be decided by probabilistic purity – it will be decided by trust. Enterprises do not need louder promises or faster demos. They need systems that execute reliably under real-world constraints.

Composite AI with deterministic orchestration, utilizing the best tools available today, is the foundation that makes agency and utility possible in high-risk environments. Probabilistic tools remain powerful when governed appropriately. Together, they form resilient, enterprise-ready systems. Refer to GenAI’s Sober Second Mind™ for a more detailed explanation of the ideal interplay between these two.

Responsible AI Agents are already available today. Organizations that value accuracy, accountability, and return on their AI investment are encouraged to rethink how agency is defined and how today’s technology can be combined to balance agency, utility, risk, and ROI. Read more about this in the free ebook: Responsible Composite AI Agents.